We’ve recently been considering the field of linguistics more at ContactEngine: what it is, why we should care, and how we can use it to our advantage. I talked briefly in my previous post about the way language is medium-dependent and context-dependent – it varies hugely, depending on a limitless number of factors. I’m sure I’ll go into this more in the future, as the field is vast and exciting. For now, the part I want to focus on, because it’s something on which we rely so completely at ContactEngine, is the relatively new but fast-growing area of human-computer interaction (HCI), and how this relates to linguistics.

The services we provide at ContactEngine are based fundamentally on communications between humans and computers. We want to make our customer journeys smooth and streamlined, but we conduct thousands – hundreds of thousands! – of journeys every day. The sheer number of customers we engage in conversation with necessarily means these have to be managed automatically by sophisticated software.

There’s a great deal of literature in the field of HCI about the design of programs and interfaces for maximum usability and functionality, so that humans can best interact with them. I’m going to leave that topic to the professional nerds (thank you nerds, you do great work). Instead, the area I’m looking at is the part most relevant here: the linguistic interactions between ContactEngine’s computer-managed communications and our human customers.

In order to create perfect customer journeys, we want customers firstly to respond to our messages – by voice, text, email, or any other way – and secondly to give the responses we want. Take the medium of text messaging. I’ve been analysing responses from our journeys, which might ask customers to confirm they can keep their appointment, or to set a date for a new one. At the moment, our messages include prescribed answers to make it clear what response we’re after, for example: “reply YES if you can make the appointment or NEW to reschedule”. These replies, and any similar ones, are easily processed by our AI system, so the whole process can be automated.
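To make the idea of a prescribed answer concrete, here is a minimal sketch of how a reply to the YES/NEW message could be sorted. It is not ContactEngine’s actual system, which is not described in this post: the function name `classify_reply`, the labels it returns, and the strict “keyword only” rule are all assumptions made for illustration.

```python
import re


def classify_reply(text: str) -> str:
    """Sort one SMS reply into 'confirm', 'reschedule' or 'unrecognised'.

    Hypothetical rule: a reply only counts if it is just the prescribed
    keyword, ignoring case, spacing and punctuation.
    """
    # Lower-case the reply and keep only runs of letters.
    words = re.findall(r"[a-z]+", text.lower())
    if words == ["yes"]:
        return "confirm"
    if words == ["new"]:
        return "reschedule"
    # Anything longer or different needs another look.
    return "unrecognised"


# Invented replies, for illustration only.
print(classify_reply(" Yes! "))
print(classify_reply("new"))
print(classify_reply("Yes, but I'll be at church until 10.45"))
```

Under this deliberately strict rule, the first two replies are handled automatically and the third falls through as unrecognised, even though a human reader would understand it straight away.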

The interesting part, I’ve found, isn’t when this functions perfectly – people obediently reply as requested, the system flows smoothly, and there’s no further need for communication. Wonderful though this is for business purposes, more notable for me are the occasional messages that wildly deviate from the prescribed responses, to the extent that our AI system can’t understand them at all.

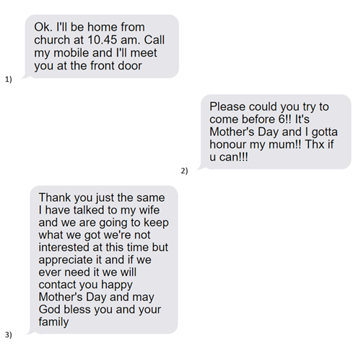

Here are some examples:

I love responses like these. The extra information, although often far more than we really need or want, is delightful – it provides a brief, charming window into a person’s life, with details that make them so human. Instead of merely stating that they’ll be available from 10.45, the person in 1) explains why: they’ll be at church! Instead of just asking to reschedule, the person in 2) writes an excitable message explaining the request – you can feel their love for their mother in every exclamation mark. Instead of simply replying NO to show they are not interested – look how lovely the person in 3) is!

Back to the point though: the takeaway message here is that people are writing back to our automated system as though they are writing to a human. It seems very likely that in many cases, people aren’t actually aware that they are interacting with a computer at all.

This has a nuanced impact in terms of creating our messages. As part of our linguistic analysis, we’ve been considering how to write messages in order to get the best response by looking into, for example, optimum levels of friendliness, politeness and formality.

However, we also have to consider this in terms of HCI. How human-like or computer-like do we want our messages to be? If we’re too friendly and informal, will people be more likely to think they are texting a person rather than a computer and provide loads of extra info we don’t need and can’t parse? If we’re overly formulaic and abrupt, thus making it obvious our messages are automated, are people going to be less likely to respond? Perhaps there’s a trade-off between getting a high response rate but rambling messages, and getting good, formulaic responses but fewer of them.

To move this discussion from the hypothetical to the provable, we’re continuously conducting linguistic testing on ContactEngine’s customer journeys. This testing will help us understand the impact of HCI and, as such, identify an optimum level of human-ness for our journeys. Then we can tailor journeys linguistically to improve the process for our customers and ourselves.
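One way to put numbers on the possible trade-off between response rate and parseable replies is sketched below. The class name `VariantStats`, its fields and the three rates are assumptions for illustration, and the counts in the usage lines are invented rather than taken from any real journey.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Counts for one wording of a message (hypothetical structure)."""
    sent: int      # messages sent with this wording
    replies: int   # replies received
    parsed: int    # replies the system could process automatically

    @property
    def response_rate(self) -> float:
        # Share of sent messages that got any reply.
        return self.replies / self.sent if self.sent else 0.0

    @property
    def parse_rate(self) -> float:
        # Share of replies that could be processed automatically.
        return self.parsed / self.replies if self.replies else 0.0

    @property
    def automated_rate(self) -> float:
        # Share of sent messages that ended in an automatically handled reply.
        return self.parsed / self.sent if self.sent else 0.0


# Invented counts, for illustration only.
friendly = VariantStats(sent=1000, replies=600, parsed=300)
formulaic = VariantStats(sent=1000, replies=400, parsed=360)

for name, stats in [("friendly", friendly), ("formulaic", formulaic)]:
    print(name, stats.response_rate, stats.parse_rate, stats.automated_rate)
```

Comparing `automated_rate` across wordings is one possible way to weigh “more replies” against “cleaner replies”; which measure matters most will depend on the journey.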

In general, this new boundary between human and computer interaction is something we have to think about as technology becomes ever more advanced. But leaving aside for a moment the complex area of the ethics of ‘intelligent’ technology (a blog post for another day), the question we’re asking is this: how are we, as humans, responding to automated computer systems, and how can we, as a business, use this carefully, responsibly and to our advantage?